Five failed tests for scientific software

Posted on 24 May 2018

By Andrew Walker, Sam Mangham, Robert Maftei, Adam Jackson, Becky Arnold, Sammie Buzzards, and Eike Mueller

This post is part of the Collaborations Workshops 2018 speed blogging series.

Good-practice guides insist that tests be written alongside our software, and continuous integration coupled to version control is supposed to boost productivity, but how do these ideas apply to the development of scientific software? Here we consider five ways in which tests and continuous integration can fail when developing scientific software, in an attempt to illustrate what could work in this context. Two themes recur: scientific software is often large and unwieldy (sometimes taking months to run on the biggest computer systems in the world), and its development often goes hand in hand with scientific research. There isn’t much point writing a test that runs for weeks and then produces an answer when you have no idea whether that answer is correct. Or is there?

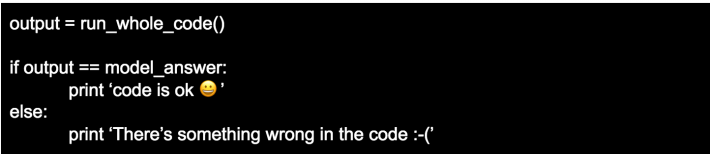

1. Test fails but doesn’t say why

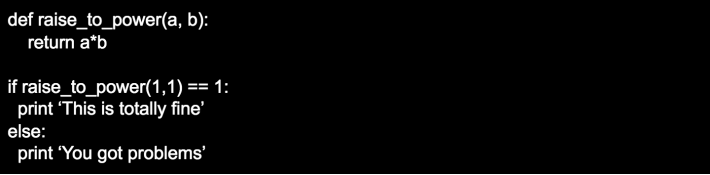

This one largely explains itself: if the test doesn’t tell you what’s wrong, how are you going to fix it? Each test either needs to target one specific part of the code, so that a failure tells you what is broken, or it needs to produce detailed, informative output that helps locate the error (ideally both!). Toolkits such as Python’s unittest framework report which named tests passed and which failed; the smaller your tests, the easier it is to find where the bugs are.
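
As a minimal sketch of what this looks like with Python’s unittest, the snippet below tests a hypothetical `kinetic_energy` function (a stand-in for real scientific code, not taken from any particular package). Each test checks a single behaviour, so the name of a failing test already narrows down the problem, and the `msg` argument adds the inputs to unittest’s own report of the mismatched values.

```python
import unittest


def kinetic_energy(mass, velocity):
    """Hypothetical routine under test: KE = 1/2 * m * v**2."""
    if mass < 0:
        raise ValueError(f"mass must be non-negative, got {mass}")
    return 0.5 * mass * velocity ** 2


class TestKineticEnergy(unittest.TestCase):
    # One behaviour per test: the test name alone says what broke.

    def test_zero_velocity_gives_zero_energy(self):
        self.assertEqual(kinetic_energy(5.0, 0.0), 0.0)

    def test_known_value(self):
        mass, velocity = 2.0, 3.0
        result = kinetic_energy(mass, velocity)
        # The msg is appended to unittest's "first != second" report,
        # recording the inputs that produced the bad value.
        self.assertAlmostEqual(
            result, 9.0, places=12,
            msg=f"inputs: mass={mass}, velocity={velocity}",
        )

    def test_energy_independent_of_direction(self):
        self.assertEqual(kinetic_energy(1.0, -4.0), kinetic_energy(1.0, 4.0))

    def test_negative_mass_rejected(self):
        with self.assertRaises(ValueError):
            kinetic_energy(-1.0, 2.0)
```

Saved as, say, `test_energy.py`, it can be run with `python -m unittest -v test_energy`, which lists every test by name together with its pass or fail status.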

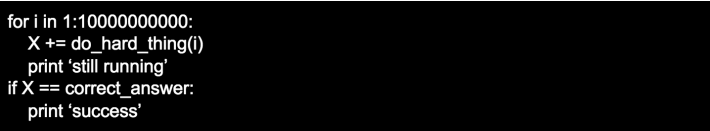

2. Test that’s still running six weeks later

Developers hate interruptions, so tests need to run quickly or people won’t bother running them! Longer tests may be necessary in some cases; if so, it can be a good idea to separate them out and run them on a schedule. Continuous integration services such as Travis CI can guard against people “forgetting” to run these tests, and run them in the background while developers get on with their next crazy idea.
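
One way to keep slow tests out of the everyday loop, sketched here with Python’s unittest, is to skip them unless an environment variable is set. The variable name `RUN_SLOW_TESTS` and the `partial_sum` function are hypothetical stand-ins for a real project convention and a real expensive calculation; a scheduled CI job would be the natural place to set the variable.

```python
import math
import os
import unittest

# Hypothetical convention: slow tests run only when RUN_SLOW_TESTS=1,
# e.g. in a nightly scheduled CI job rather than on every commit.
RUN_SLOW = os.environ.get("RUN_SLOW_TESTS") == "1"


def partial_sum(n_terms):
    """Hypothetical stand-in for an expensive calculation: the first
    n_terms of the series 1/1**2 + 1/2**2 + ..., which tends to pi**2 / 6."""
    return sum(1.0 / k ** 2 for k in range(1, n_terms + 1))


class QuickTests(unittest.TestCase):
    def test_first_two_terms(self):
        # Cheap enough to run on every commit: 1 + 1/4 = 1.25 exactly.
        self.assertEqual(partial_sum(2), 1.25)


@unittest.skipUnless(RUN_SLOW, "set RUN_SLOW_TESTS=1 to run slow tests")
class SlowTests(unittest.TestCase):
    def test_converges_to_pi_squared_over_six(self):
        n = 1_000_000
        # The tail of the series after n terms is smaller than 1/n;
        # the factor of 2 leaves room for floating-point rounding.
        self.assertAlmostEqual(partial_sum(n), math.pi ** 2 / 6, delta=2.0 / n)
```

Locally, `python -m unittest` runs only the quick test and reports the slow one as skipped, while `RUN_SLOW_TESTS=1 python -m unittest` runs both. Keeping the switch in the environment means the same test files serve both the per-commit and the scheduled runs.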

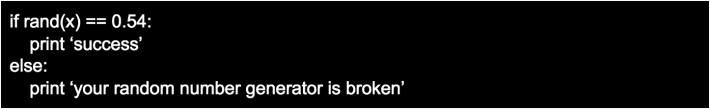

3. Randomness in results

Randomness is a powerful scientific tool, and stochastic methods are used in many fields. Reproducibility of stochastic results is an interesting problem for science in general, but when it comes to programming there are some ways around it. The “pseudo-random” number generators used in such codes produce a deterministic sequence of values from an initial seed. If you set this seed explicitly during testing, you should get the same answer every time!
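
A minimal sketch, assuming a simple Monte Carlo estimate of π stands in for the stochastic calculation: the hypothetical `estimate_pi` function takes the seed as an argument and draws from its own `random.Random` instance, so a test can pin the seed and expect identical output on every run.

```python
import random


def estimate_pi(n_samples, seed):
    """Monte Carlo estimate of pi from points drawn in the unit square."""
    # A private generator seeded explicitly: the same seed always gives
    # the same sequence, regardless of other uses of the `random` module.
    rng = random.Random(seed)
    inside = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:  # point lies inside the quarter circle
            inside += 1
    return 4.0 * inside / n_samples


# With a fixed seed, a test can demand an exact repeat of the result...
assert estimate_pi(10_000, seed=42) == estimate_pi(10_000, seed=42)
# ...while still checking the answer against a sensible tolerance.
assert abs(estimate_pi(100_000, seed=1) - 3.14159) < 0.05
```

Passing the seed in explicitly, rather than seeding the global generator, keeps the result independent of any other code that draws random numbers; NumPy users can do the same with `numpy.random.default_rng(seed)`. Bear in mind that a fixed seed guarantees identical results only for the same code, library version and order of operations: parallel runs that split the random stream differently may not reproduce bit for bit.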

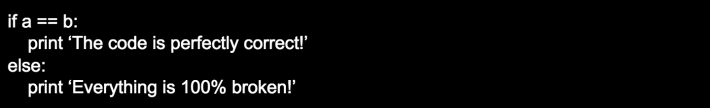

4. Test is excessively precise

Any simulation is going to have limits on its fidelity, arising either from the code itself (e.g. single- versus double-precision floats) or from the accuracy of the data that goes into it. The equations used will also contain simplifications that cause a loss of precision, and for stochastic codes there are even fundamental limits on the accuracy of the output. A test that requires exact equality is never going to be satisfied, and neither is one that demands an unrealistic level of precision. If there could be ±20% errors on your input data points, writing your code to achieve 1% agreement in some comparison between adjacent points isn’t a productive use of time. Worse, it gives a false sense of precision: you cannot truly know the answer to that level of accuracy!

Where possible, the best strategy is to acknowledge the errors in your data source and method, and to set test tolerances to match.
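
To illustrate in Python: exact equality fails even for simple floating-point arithmetic, whereas `math.isclose` compares within a relative tolerance. The helper `agrees_with_reference` is hypothetical, and it simplifies matters by taking the relative uncertainty of the input data directly as the tolerance; a real code would need to propagate input errors through its model to decide how close is close enough.

```python
import math

# Exact equality fails even for simple floating-point arithmetic.
print(0.1 + 0.2 == 0.3)              # False
print(math.isclose(0.1 + 0.2, 0.3))  # True (default rel_tol=1e-09)


def agrees_with_reference(simulated, reference, input_rel_error):
    """Hypothetical helper: accept agreement within the relative
    uncertainty already present in the input data (a simplification)."""
    return math.isclose(simulated, reference, rel_tol=input_rel_error)


# With +/-20% uncertainty on the inputs, a 15% discrepancy is acceptable...
print(agrees_with_reference(1.15, 1.0, input_rel_error=0.20))  # True
# ...whereas demanding 1% agreement asks more than the data can support.
print(agrees_with_reference(1.15, 1.0, input_rel_error=0.01))  # False
```

A purely relative tolerance breaks down for reference values at or near zero, which is why `math.isclose` also accepts an `abs_tol` argument; for NumPy arrays, `numpy.testing.assert_allclose` takes both `rtol` and `atol`.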

5. Overly idealised test case?

One of the simplest ways of testing for correctness is to run the code on a problem for which the “exact” solution is available. This could be a setup for which an analytical solution is known, or one that is particularly simple, for example because it has a certain symmetry. While this definitely checks correctness for that particular case and helps to find some errors, it usually does not cover the more general problem the code is meant to solve. Overly idealised tests can still be useful if they form just part of the full test suite and the user is aware of their limited power. Tests of this kind could, for example, be complemented by others that check conservation properties and invariants of physical systems, which can be tested even in general cases.
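
As a sketch of the two kinds of test working together, assume the code under test is a simple velocity Verlet integrator for a particle of unit mass. For the harmonic force the exact solution x(t) = cos t is known, which gives an idealised test; for a quartic potential with force `-x**3` we only check that the total energy is conserved, an invariant that holds without needing a closed-form solution. The integrator, the potentials and the suggested tolerance are illustrative choices, not taken from any particular code.

```python
import math


def velocity_verlet(x, v, force, dt, n_steps):
    """Integrate a 1D particle of unit mass with the velocity Verlet scheme."""
    a = force(x)
    for _ in range(n_steps):
        x += v * dt + 0.5 * a * dt * dt
        a_new = force(x)
        v += 0.5 * (a + a_new) * dt
        a = a_new
    return x, v


def harmonic_force(x):
    # Idealised case (k = m = 1): exact solution x(t) = cos(t) for x0=1, v0=0.
    return -x


def quartic_force(x):
    # General case: potential U(x) = x**4 / 4, no simple closed-form x(t).
    return -x ** 3


def quartic_energy(x, v):
    # Total energy, which the true dynamics conserves exactly.
    return 0.5 * v * v + 0.25 * x ** 4


t_end, n_steps = 10.0, 10_000
dt = t_end / n_steps

# Idealised test: compare against the analytical solution.
x, v = velocity_verlet(1.0, 0.0, harmonic_force, dt, n_steps)
harmonic_error = abs(x - math.cos(t_end))

# Invariant test: relative energy drift in the anharmonic case.
e0 = quartic_energy(1.0, 0.0)
x, v = velocity_verlet(1.0, 0.0, quartic_force, dt, n_steps)
energy_drift = abs(quartic_energy(x, v) - e0) / e0

# A test might require both to stay below an assumed tolerance, e.g. 1e-4.
print(harmonic_error, energy_drift)
```

Neither check is sufficient alone: the analytical comparison catches errors in the basic scheme, while the energy check exercises the integrator on a problem closer to general use, where no exact answer is available.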

Wrap up

As we can see, poorly thought-out tests can cause a great deal of trouble and confusion! It is important to construct your tests with care and to consider:

- Does the test return useful information?
- Will this test actually be used? Is it simple to run, and can it be run in an acceptable amount of time?
- Is the test reliable? Does it return the same output every time?
- Is it accurate? Could it fail or succeed when it shouldn’t?
- Does the test suite cover all the scenarios the code may be applied to?