Towards software that supports interpretation rather than quantification

Posted on 4 April 2017

By Heather Ford, University of Leeds.

My research involves the study of the emerging relationships between data and society that are encapsulated by the fields of software studies, critical data studies and infrastructure studies, among others. These fields of research are primarily aimed at interpretive investigations into how software, algorithms and code have become embedded into everyday life, and how this has resulted in new power formations, new inequalities and new authorities of knowledge [1]. Some of the subjects of this research include the ways in which Facebook’s News Feed algorithm influences the visibility and power of different users and news sources (Bucher, 2012), how Wikipedia delegates editorial decision-making and moral agency to bots (Geiger and Ribes, 2010), and the effects of Google’s Knowledge Graph on people’s ability to control facts about the places where they live (Ford and Graham, 2016).

As the only Software Sustainability Institute fellow working in this area, I set myself the goal of investigating what tools, methods and infrastructure researchers working in these fields were using to conduct their research. Although Big Data is a challenge for every field of research, I found that the challenge for social scientists and humanities scholars doing interpretive research in this area is unique and perhaps even more significant. Two key challenges stand out. The first is that the data requiring interpretation tends to be much larger than the datasets these researchers have traditionally analysed. This often requires at least some level of quantification in order to ‘zoom out’ and obtain a bigger picture of the phenomenon or issues under study. Researchers in this tradition often lack the skills to conduct such analyses, particularly at scale. The second challenge is that online data is subject to ethical and legal restrictions, particularly when the research is interpretive (as opposed to statistical research that relies on anonymised data).

In many universities, it seems that mathematics, engineering, physics and computer science departments have started to build internal infrastructure to deal with Big Data, and some universities have established good Digital Humanities programmes that are largely about the quantitative study of large corpora of images, films, videos or other cultural objects. But infrastructure and expertise are severely lacking for those wishing to do interpretive rather than quantitative research with online data, using mixed, experimental, ethnographic or qualitative methods. The software and infrastructure required for doing interpretive research are patchy, departments are typically ill-equipped to support researchers and students with the expertise required to conduct social media research, and significant ethical questions remain about doing social media research, particularly in the context of data protection laws.

Data Carpentry offers some promise here. I organised, with the support of the Software Sustainability Institute, a "Data Carpentry for the Social Sciences" workshop with Dr Brenda Moon (Queensland University of Technology) and Martin Callaghan (University of Leeds) in November 2016 at Leeds University. Data Carpentry workshops tend to be organised for quantitative work in the hard sciences, and there were no lesson plans for dealing with social media data. Brenda stepped in to develop some of these materials, based partly on the really good Library Carpentry resources, and both Martin and Brenda (with additional help from Dr Andy Evans, Joanna Leng and Dr Viktoria Spaiser) made an excellent start towards seeding the lessons database with some exercises specific to social media.

The two-day workshop centred on examples from Twitter data, and participants worked with Python and other off-the-shelf tools to extract and analyse data. There were fourteen participants in the workshop, ranging from PhD students to professors and from media and communications to sociology and social policy, music to law, earth and environment to translation studies. At the end of the workshop participants said that they felt they had received a strong grounding in Python and that the course was useful, interactive, open and not intimidating. There were suggestions, however, to improve the Twitter lessons and perhaps to split the group on the second day, so that some participants could move on to more advanced programming while beginners went over the foundations.

Also supported by the Institute was my participation in two conferences in Australia at the end of 2016. The first was a conference exploring the impact of automation on everyday life at the Queensland University of Technology in Brisbane; the second was the annual Crossroads in Cultural Studies conference in Sydney. Through my participation in these events (and via other information-gathering that I have been conducting in my travels) I have learned that many researchers in the social sciences and humanities suffer from a significant lack of local expertise and infrastructure. On multiple occasions I learned of PhD students and researchers running analyses of millions of tweets on their laptops, encountering a lack of understanding when applying for ethical approval, and conducting analyses that lack a consistent approach.

Centres of excellence in digital methods around the world share code and lessons learned where they can. One such programme is the Digital Methods Initiative (DMI) at the University of Amsterdam. The DMI hosts regular summer and winter schools to train researchers in using digital methods tools and provides free access to some of the open source software tools that it has developed for collecting and analysing digital data. Queensland University of Technology’s Social Media Group also hosts summer schools and has contributed to methodological scholarship employing interpretive approaches to social media and internet research. The common characteristics of such programmes are that they are collaborative (sharing resources across university departments and between different universities) and innovative (breaking some of the rules that govern traditional research in the university).

Many researchers who handle data in more interpretive studies tend to rely on these global hubs in the few universities where infrastructure is being developed. The UK could benefit from a similar hub for researchers locally, especially since software and code need to be continually developed and maintained for a much wider variety of evolving methods. Alternatively, or alongside such hubs, Data Carpentry workshops could serve as an important virtual hub for sharing lesson plans and resources. Data Carpentry could, for example, host code that can be used to query APIs for social media research, and workshops could also be used to collaboratively explore or experiment with methods for iterative, grounded investigation of social media practices.
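To give a rough sense of the kind of small, shareable lesson code this could mean, here is a minimal Python sketch. It is not taken from the workshop materials. The sample tweets, the `id` and `text` field names and all of the function names are hypothetical, and it assumes tweets have already been collected and saved as one JSON object per line. It counts hashtags to ‘zoom out’, then draws a small random sample of matching tweets to read closely.

```python
import json
import random
from collections import Counter

# Hypothetical sample data: one JSON object per line, as if already collected.
SAMPLE_LINES = [
    '{"id": 1, "text": "Flooding on the high street again #leeds #floods"}',
    '{"id": 2, "text": "Council meeting tonight about #floods."}',
    '{"id": 3, "text": "Great gig last night #Leeds"}',
]


def load_tweets(lines):
    """Parse one JSON object per line, skipping blank lines."""
    return [json.loads(line) for line in lines if line.strip()]


def hashtags(text):
    """Return the lower-cased hashtags in a text, without trailing punctuation."""
    tags = []
    for word in text.split():
        tag = word.lower().rstrip(".,!?;:")
        if tag.startswith("#") and len(tag) > 1:
            tags.append(tag)
    return tags


def hashtag_counts(tweets):
    """Zoom out: count how often each hashtag appears across all tweets."""
    counts = Counter()
    for tweet in tweets:
        counts.update(hashtags(tweet["text"]))
    return counts


def sample_for_close_reading(tweets, tag, k, seed=0):
    """Zoom in: pick up to k tweets that use a hashtag, for interpretive reading."""
    matching = [t for t in tweets if tag in hashtags(t["text"])]
    rng = random.Random(seed)  # fixed seed so the sample can be reproduced
    return rng.sample(matching, min(k, len(matching)))


if __name__ == "__main__":
    tweets = load_tweets(SAMPLE_LINES)
    print(hashtag_counts(tweets).most_common(5))
    for tweet in sample_for_close_reading(tweets, "#leeds", k=2):
        print(tweet["id"], tweet["text"])
```

The counting step is deliberately simple. The point of the sketch is the second step, which hands a manageable and reproducible sample back to the researcher for interpretation rather than ending with a number.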

Due to the rapid increase in the scale and velocity of social media data, and because of the lack of technical expertise to manage such data, social scientists and humanities scholars have taken a back seat to the hard sciences in explaining new dimensions of social life online. This is disappointing because it means that much of the research coming out about social media, Big Data and computation lacks a connection to important social questions about the world. Building on the momentum of initiatives like these will be essential in the next few years if we are to see social scientists and humanities scholars adding their important insights into social phenomena online. Much more needs to be done to build flexible and agile resources for the rapidly advancing field of social media research if we are to benefit from the contributions of social science and humanities scholars in the field of digital cultures and politics.

[1] For an excellent introduction to the contribution of interpretive scholars to questions about data and the digital, see ‘The Datafied Society’, just published by Amsterdam University Press.

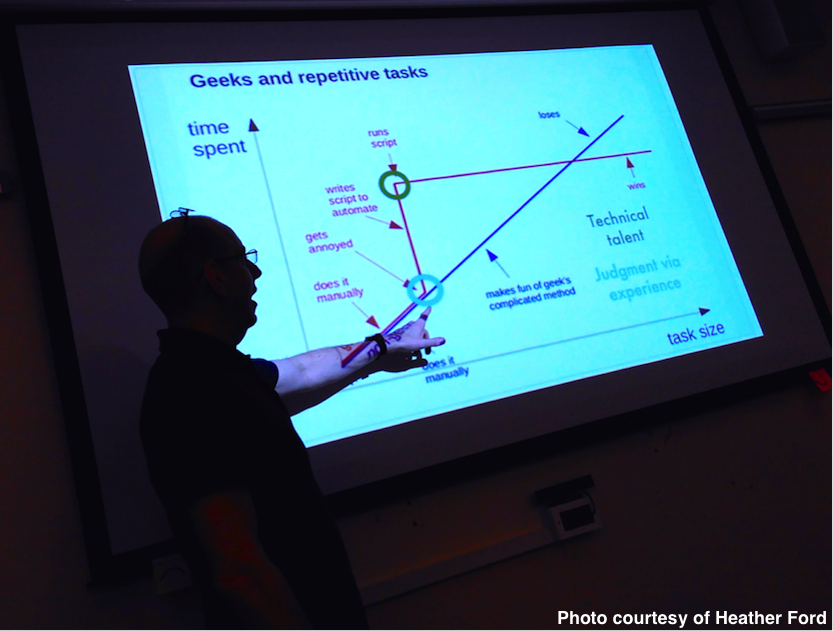

Picture: Martin Callaghan displays the ‘Geeks and repetitive tasks’ model during the November 2016 Data Carpentry for the Social Sciences workshop at Leeds University.