Beyond technical fixes: the role of research software engineering in creating fairer and accountable socio-technical systems

Posted on 5 August 2021

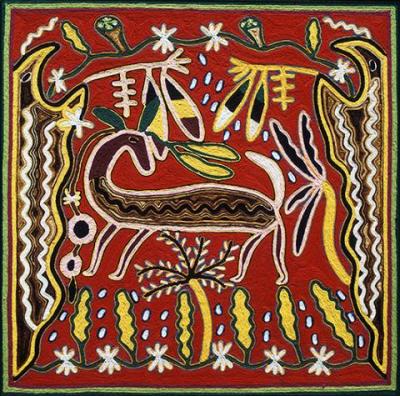

Wixárika art The Sacred Snare by Juan Ríos Martínez

By 2021 SSI Fellow Yadira Sánchez.

This is an introduction to the ideas I want to bring to life during my Fellowship and some of the reasons why. I would like to think that my plan is based on further reimagining the computational sciences collectively, something already being done by other researchers. The plan includes organising workshops and carpentries with colleagues across different disciplines and with communities (outside academia) with these aims in mind:

1) to co-create free, open source prototypes of technological artefacts based on “sociotechnical imaginaries”

2) to build actionable guidelines that will enable critiques of broader sociotechnical systems to prevent, detect and mitigate algorithmic harms within research software engineering

3) to draft practical steps that researchers and software engineers, or anyone using or creating software within and outside academia, can take to proactively accept accountability for the sociotechnical systems we create and are part of - to go beyond the trap of technical fixes.

Why beyond technical fixes?

When people explain how software and machine learning tools make decisions, “training” is the usual starting point: a computer is exposed to a large amount of data and learns to make judgments and predictions based on the patterns it notices. This is often connected with the argument that bad data leads to bias. But this thinking seems to stay within the boundaries of technical fixes.
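To make the “training” explanation concrete, here is a minimal sketch in Python. Everything in it is hypothetical and made up for illustration: the `HISTORY` records, the groups "A" and "B", and the `train` and `predict` helpers are assumptions, not a real dataset or a real library. The "model" simply memorises how often each pattern was approved in the past, which is a deliberately simplified stand-in for what a machine learning model does.

```python
from collections import defaultdict

# Hypothetical historical decisions: (group, qualified, approved).
# Qualified applicants in group "B" were approved far less often than in group "A".
HISTORY = (
    [("A", True, True)] * 9 + [("A", True, False)] * 1
    + [("B", True, True)] * 3 + [("B", True, False)] * 7
    + [("A", False, False)] * 10 + [("B", False, False)] * 10
)


def train(records):
    """'Learn' the approval rate observed for each (group, qualified) pattern."""
    counts = defaultdict(lambda: [0, 0])  # pattern -> [approved, total]
    for group, qualified, approved in records:
        counts[(group, qualified)][0] += int(approved)
        counts[(group, qualified)][1] += 1
    return {pattern: a / n for pattern, (a, n) in counts.items()}


def predict(model, group, qualified):
    """Approve when the learned approval rate for this pattern is at least 0.5."""
    return model.get((group, qualified), 0.0) >= 0.5


model = train(HISTORY)
print(predict(model, "A", True))  # qualified applicant from group A
print(predict(model, "B", True))  # equally qualified applicant from group B
```

In this toy setting the two equally qualified applicants get different answers, because the past decisions the model learned from treated the groups differently. Rebalancing the records would change the output, but it would say nothing about why the past decisions looked like that or who is harmed by automating them, which is the part a technical fix does not reach.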

If we think of data from a scientific research point of view, we expect formal research processes to identify bias: different demographics, contexts and other representations have been carefully considered, limitations and challenges have been weighed, and the results have been peer-reviewed by the disciplines concerned. However, this is not necessarily the case when it comes to AI-based systems.

Questions like “What student gets the A-level grades needed to go to X University?”, “Which community needs more police presence?” and “Is your credit score good enough?” are examples of questions being answered by algorithms that we now know are biased and have caused harm, more specifically 'systematic algorithmic harm' and 'algorithmic injustice'.

There is a robust body of research in the area of algorithmic injustice showing how algorithmic tools embed and perpetuate societal and historical biases and injustices¹. There is also an argument that “bias” has been misused to mean an individual perception that can be technically fixed, which diverts our discussions away from adequately prioritising, naming and locating the systematic harms of the technologies we build.

While bias is human, these biases stop being just individual biases and become systematic structures when they have the power to become entrenched in decision-making processes and policies that end up harming groups of people and communities.

Acknowledging that these systematic harms exist is key; challenging them and co-creating different imaginaries even more so. Our software systems and machine learning models are created under current social structures that perpetuate systematic harm, and going beyond the “bias” argument towards a critique of broader sociotechnical systems in which they are created might give us a better idea of what we are dealing with and how to go about it.

Research in algorithmic fairness is extensive, but it focuses on technical solutions, which are necessary but still superficial. There are already efforts in the AI community to raise awareness of, avoid and correct discriminatory bias in algorithms while also making them accountable and equitable: Algorithmic Justice League; Just Data Lab; Data Privacy Lab; Data for Black Lives; Black in AI.

Since research software engineering is a close collaboration between researchers from many disciplines and software engineers, this space has the potential to create more equitable and accountable algorithmic systems while also reimagining and co-creating different sociotechnical imaginaries.

However, our desire to create research software that is equitable and accountable will more often than not face systematic barriers: a research culture that hinders collaborative work across disciplines and researchers, a lack of open and transparent research processes, and/or resistance because it may upset the status quo.

On an individual level, as researchers and software engineers working with data and software on a daily basis we can work towards more equitable and accountable systems by drawing the bigger picture into our everyday work.

Cognitive scientists Abeba Birhane and Fred Cummins' paper “Algorithmic Injustices: Towards a Relational Ethics” offers grounds to do so: 1) centring the disproportionately impacted; 2) prioritisation of understanding over prediction; 3) algorithms as more than tools that create and sustain certain social order; 4) bias, fairness and justice are moving targets².

Curation, documentation and accountability are also important to have in mind. Computational linguist Emily Bender gives insight into potential approaches when working with datasets and machine learning models: 1) budget for documentation and only collect as much data as can be documented; 2) no documentation is a potential for harm without remedy; 3) avoid documentation debt - datasets both undocumented and too big to document after; 4) while documentation of the problem is an important first step, it is not a solution; 5) if we are probing for bias, we need to know what biases we are looking for and what social categories - this requires knowing who are the marginalized ones; 6) probing for bias actually requires local input for the context of deployment of the technology.

On a collective level, calls for accountability within our institutions are viable and tangible ways to support researchers and engineers who face censorship in their research. The firing of Dr Timnit Gebru has brought a lot of important issues to many tables in academia and the tech industry, including of course the role of broader systemic racism in technology and research.

No Tech For Tyrants, a student-led, activist, UK-based organisation that organises, researches and campaigns to dismantle the violence infrastructure at the intersection of ethics, technology and societal impacts, has designed some resources that can help current students (and research software engineers too) sever the links between oppressive technologies and institutions of concern.

Free, open source science, data and/or software also offer viable ways to make fairer and more transparent systems, allowing other research scientists to reproduce, reuse and reanalyse the data. Free, open source software, data and research have also been used to involve communities outside academia in the process, for advocacy and social justice purposes³.

I also believe research software engineering is a collective effort of disciplines coming together, and without the input of social scientists, debaters, and creative and critical thinkers, research software engineering will struggle to adapt to humanity. The inequalities created by data-driven decision making will only become deeper unless multi-disciplinary thinking, community engagement and debate are embedded in research software engineering production.

Bibliography

1 In Birhane & Cummins, 2019 | Weapons of math destruction…; Seeing without knowing…; Race after technology…

2 A more recent paper by Abeba Birhane has been published | Perspective algorithmic injustice: A Relational Ethics Approach

3 Police brutality at the Black lives matter protests; Mapping police violence across the USA; María Salguero is filling in the gaps left by official data on gender-related killings in Mexico

Birhane, A. & Cummins, F. (2019) Algorithmic injustices: Towards a relational ethics. NeurIPS 2019.

Coldicutt, R. (2018) Ethics won’t make software engineering better.

Dave, K. (2019) Systemic algorithmic harms. Data & Society.

Jaspreet (2019) Understanding and Reducing Bias in Machine Learning. Towards Data Science.