March of the Titans: generating Dinosaur locomotion with Virtual Robotics

Posted on 29 November 2013

By Dr Bill Sellers, researcher at the Faculty of Life Sciences, University of Manchester.

This article is part of our series, a day in the software life, in which we ask researchers from all disciplines to discuss the tools that make their research possible.

Making Dinosaurs move is fun. As anyone who's seen Jurassic Park will tell you, extinct megafauna is a sight to behold. That doesn't mean there is any scientific basis behind what we see at the movies. All too often, the animators produce something that they think looks credible, but this is hardly good science.

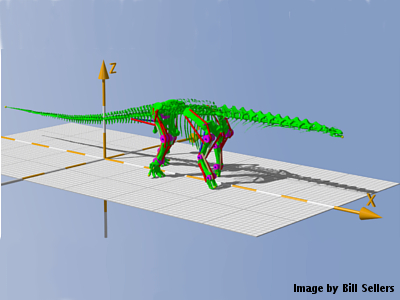

Fortunately, there is a far more accurate alternative: virtual robotics. Put simply, if you treat your fossilised beast as a machine that needs testing, and as long as you know the mechanical properties of its bones, you should be able to accurately simulate how it moved.

We went about this with the remains of Argentinosaurus huinculensis, which, at 73 tonnes, is the largest known Dinosaur to have walked the Earth. The first step was to get the creature into the computer. We used a Lidar scanner to capture between 10 and 20 scans around the skeleton, as we needed at least 100 decent photographs (preferably 500) for a reasonable reconstruction. Next, the digital model went through various stages of clean-up using either commercial products, like Geomagic, or free ones, like MeshLab, until we ended up with a skeletal model that we were all happy with.

In particular, the point cloud generated by the scanning process needed to be converted into a surface mesh consisting of between 1,000 and 10,000 polygons per bone, although more complex shapes such as the skull needed even more. These mesh objects were then imported into a suitable CAD package such as 3DS Max or Blender and aligned to produce a reference skeleton in a standard pose.

The rest of the work was done using our open-source simulation software, GaitSym. This program gives us the wrappers needed to simulate muscles and other important body parts. It is written in C++ so it can be easily ported to a number of HPC platforms.

C++ was also one of the reasons behind choosing Qt as a development framework. Most of our work is done using Apple Mac computers, but I have never found Objective-C or Objective-C++ all that much fun to program with. I like a compiler that can spot my errors, and I find all that nesting of square brackets makes code relatively hard to read. Qt is great in that I can do almost all the coding in C++, and it has some excellent higher-level user interface elements. Its only downside is that it looks ugly and the interface is not as responsive as a native interface would be. Of course, the biggest plus is that we can create both Linux and Windows versions with minimal extra effort.

The biggest computational challenge, however, is the machine learning aspect that works via the command line. We used a genetic algorithm approach to drive the muscles in the simulation. This was very flexible, as it meant we didn't have to make any assumptions about the type of gait that we would generate. However, it was also very slow, because we needed to run the simulation many times.
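
To give a flavour of why this is slow, here is a minimal Python sketch of a genetic algorithm. It is not GaitSym code: the names `toy_fitness` and `evolve`, the six-number "genome" and every parameter value are hypothetical, invented purely for illustration. In a real run, each fitness evaluation would be a full physics simulation of the animal rather than a one-line formula.

```python
import random


def toy_fitness(genome):
    """Hypothetical stand-in for one simulation run (NOT GaitSym's fitness).

    Scores a genome by how close it is to an arbitrary target pattern;
    higher is better, and 0.0 is a perfect match.
    """
    target = [0.2, 0.8, 0.5, 0.1, 0.9, 0.4]
    return -sum((g - t) ** 2 for g, t in zip(genome, target))


def evolve(fitness, genome_length=6, population_size=40, generations=60,
           mutation_sd=0.1, elite=4, seed=0):
    """Evolve genomes of values in [0, 1] to maximise `fitness`."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [[rng.random() for _ in range(genome_length)]
                  for _ in range(population_size)]
    best_per_generation = []

    for _ in range(generations):
        # Every candidate in every generation needs an evaluation, so the
        # cost is roughly population_size * generations simulation runs.
        ranked = sorted(population, key=fitness, reverse=True)
        best_per_generation.append(fitness(ranked[0]))

        # Keep the top few unchanged, then breed the rest from the top half.
        parents = ranked[:population_size // 2]
        next_population = [list(g) for g in ranked[:elite]]
        while len(next_population) < population_size:
            mum, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, genome_length)   # one-point crossover
            child = mum[:cut] + dad[cut:]
            # Gaussian mutation, clamped back into the valid range.
            child = [min(1.0, max(0.0, g + rng.gauss(0.0, mutation_sd)))
                     for g in child]
            next_population.append(child)
        population = next_population

    best = max(population, key=fitness)
    return best, best_per_generation


if __name__ == "__main__":
    best, history = evolve(toy_fitness)
    print("first generation best:", history[0])
    print("final best:", toy_fitness(best))
```

Nothing in the loop says what a good answer should look like beyond the fitness score, which is where the flexibility comes from. The price is the evaluation count: with these made-up settings the sketch scores about 2,400 candidates, which is trivial for a formula but a very different matter when each one is a simulated walk.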

Accepting that cost was a deliberate design decision. There are other groups working in the area who provide much tighter constraints on how the animal can move, which makes the process much quicker. However, it also means you only ever get the answers you wanted in the first place. Such an approach may be fine if you are fairly sure how a fossil animal moved, such as an early hominid, but if you have an animal whose anatomy is unlike that of any modern animal (as with most Dinosaurs), then it is a dangerous assumption to make.

Our end results, by contrast, were impressive and demonstrated a highly probable locomotion model for Argentinosaurus. Not only that, but it required minimal subjective input and produced useful mechanical outputs that can be used for other work. Best of all, of course, is the fact that our software lets this magnificent Dinosaur walk for the first time in over 93 million years!