Software Sustainability in Remote Sensing

Posted on 5 May 2016

By Robin Wilson, Geography and Environment & Institute for Complex Systems Simulation, University of Southampton.

This is the third in a series of articles by the Institute's Fellows, each covering an area of interest that relates directly both to their own work and the wider issue of software's role in research.

1. What is remote sensing?

Remote sensing broadly refers to the acquisition of information about an object remotely (that is, with no physical contact). The academic field of remote sensing, however, is generally focused on acquiring information about the Earth (or other planetary bodies) using measurements of electromagnetic radiation taken from airborne or satellite sensors. These measurements are usually acquired in the form of large images, often containing measurements in a number of different parts of the electromagnetic spectrum (for example, in the blue, green, red and near-infrared), known as wavebands. These images can be processed to generate a huge range of useful information including land cover, elevation, crop health, air quality, CO2 levels, rock type and more, which can be mapped easily over large areas. These measurements are now widely used operationally for everything from climate change assessments (IPCC, 2007) to monitoring the destruction of villages in Darfur (Marx and Loboda, 2013) and the deforestation of the Amazon rainforest (Kerr and Ostrovsky, 2003).

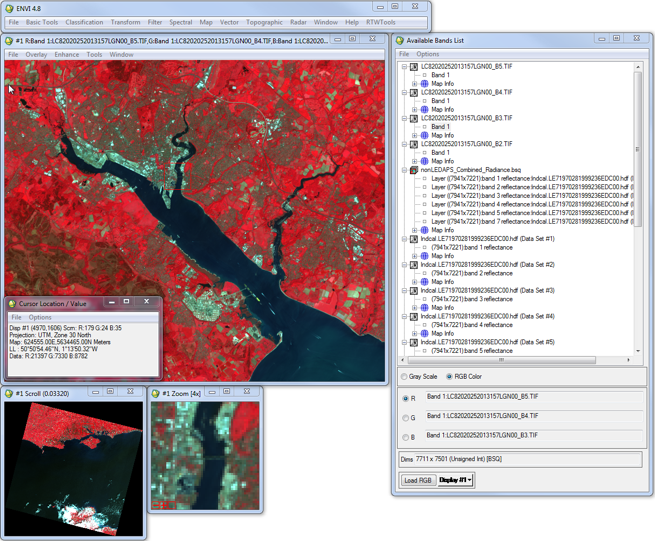

Figure 1: Landsat 8 image of Southampton, UK shown as a false-colour composite using the Near-Infrared, Red and Green bands. Vegetation is bright red, heathland and bare ground is beige/brown, urban areas are light blue, and water is dark blue.

The field, which crosses the traditional disciplines of physics, computing, environmental science and geography, developed out of air photo interpretation work during World War II, and expanded rapidly during the early parts of the space age. The launch of the first Landsat satellite in 1972 provided high-resolution images of the majority of the Earth for the first time, producing repeat images of the same location every 16 days at a resolution of 60m. In this case, the resolution refers to the level of detail, with a 60m resolution image containing measurements for each 60x60m area on the ground. Since then, many hundreds of further Earth Observation satellites have been launched which take measurements in wavelengths from ultra-violet to microwave at resolutions ranging from 50km to 40cm. One of the most recent launches is that of Landsat 8, which will contribute more data to an unbroken record of images from similar sensors acquired since 1972, now at a resolution of 30m and with far higher quality sensors. The entire Landsat image archive is now freely available to everyone - as are many other images from organisations such as NASA and ESA - a far cry from the days when a single Landsat image cost several thousands of dollars.
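To make the idea of resolution concrete, the short sketch below counts how many pixels are needed to cover a square patch of ground at a given pixel size. The 6 km study area and the function name are illustrative assumptions rather than properties of any particular sensor.

```python
import math


def pixels_to_cover(side_m, resolution_m):
    """Number of square pixels needed to cover a square area of ground.

    side_m: length of one side of the area in metres (hypothetical value).
    resolution_m: ground size of one pixel in metres.
    """
    per_side = math.ceil(side_m / resolution_m)
    return per_side * per_side


# A hypothetical 6 km x 6 km study area at the two Landsat resolutions
# mentioned in the text.
for res in (60, 30):
    print(f"{res} m pixels: {pixels_to_cover(6000, res)}")
```

Halving the pixel size from 60m to 30m quadruples the number of measurements for the same area, which is one reason data volumes grow so quickly as sensors improve.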

2. You have to use software

Given that remotely sensed data are nearly always provided as digital images, it is essential to use software to process them. This was often difficult with the limited storage and processing capabilities of computers in the 1960s and 1970s - indeed, the Corona series of spy satellites operated by the United States from 1959 to 1972 used photographic film which was then sent back to Earth in a specialised ‘re-entry vehicle’ and then developed and processed like standard holiday photos!

However, all civilian remote-sensing programs transmitted their data to ground stations on Earth in the form of digital images, which then required processing by both the satellite operators (to perform some rudimentary geometric and radiometric correction of the data) and the end-users (to perform whatever analysis they wanted from the images).

In the early days of remote sensing, computers didn’t even have the ability to display images onscreen, and the available software was very limited (see Figure 2a). Nowadays remote sensing researchers use a wide range of proprietary and open-source software, which can be split into four main categories:

- Specialist remote-sensing image processing software, such as ENVI, Erdas IMAGINE, eCognition, Opticks and Monteverdi.
- Geographical Information System software which provides some remote-sensing processing functionality, such as ArcGIS, IDRISI, QGIS and GRASS.
- Other specialist remote-sensing software, such as SAMS for processing spectral data, SPECCHIO for storing spectral data with metadata, DART (Gastellu-Etchegorry et al., 1996) for simulating satellite images and many numerical models including 6S (Vermote et al., 1997), PROSPECT and SAIL (Jacquemoud, 1993).
- General scientific software, including numerical data processing tools, statistical packages and plotting packages.


Figure 2: Remote sensing image processing in the early 1970s (a) and 2013 (b). The 1970s image shows a set of punched cards and an ‘ASCII-art’ printout of a Landsat image of Southern Italy, produced by E. J. Milton as part of his PhD. The 2013 image shows the ENVI software viewing a Landsat 8 image of Southampton on a Windows PC.

I surveyed approximately forty people at the Remote Sensing and Photogrammetry Society’s Annual Student Meeting 2013 to find out how they used software in their research, and this produced a list of 19 pieces of specialist software which were regularly used by the attendees, with some individual respondents regularly using over ten specialist tools.

Decisions about which software to use are often based on experience gained through university-level courses. For example, the University of Southampton uses ENVI and ArcGIS as the main software in most of its undergraduate and MSc remote sensing and GIS courses, and many of its students will continue to use these tools significantly for the rest of their careers. Due to the limited amount of teaching time, many courses only cover one or two pieces of specialist software, thus leaving students under-exposed to the range of tools which are available in the field. There is always a danger that students will learn a particular tool rather than the general concepts which will allow them to apply their knowledge to any software they might need to use in the future.

I was involved in teaching a course called Practical Skills in Remote Sensing as part of the MSc in Applied Remote Sensing and GIS at the University of Southampton which tried to challenge this, by introducing students to a wide range of proprietary and open-source software used in remote sensing, particularly software which performs more specialist tasks than ENVI and ArcGIS. Student feedback showed that they found this very useful, and many went on to use a range of software in their dissertation projects.

2.1 Open Source Software

In the last decade there has been huge growth in open-source GIS software, with rapid developments in tools such as Quantum GIS (a GIS display and editing environment with a similar interface to ArcGIS) and GRASS (a geoprocessing system which provides many functions for processing geographic data and can also be accessed through Quantum GIS). Ramsey (2009) argues that the start of this growth was caused by the slowness of commercial vendors to react to the growth of online mapping and internet delivery of geospatial data, and this created a niche for open-source software to fill. Once it had a foothold in this niche, open-source GIS software was able to spread more broadly, particularly as many smaller (and therefore more flexible) companies started to embrace GIS technologies for the first time.

Unfortunately, the rapid developments in open-source GIS software have not been mirrored in remote sensing image processing software. A number of open-source GIS tools have specialist remote sensing functionality (for example, the i.* commands in GRASS), but as the entire tool is not focused on remotely sensed image processing they can be harder to use than tools such as ENVI. Open-source remote sensing image processing tools do exist (for example, Opticks, OrfeoToolbox, OSSIM, ILWIS and InterImage), but they tend to suffer from common issues for small open-source software projects, specifically: poor documentation; complex and unintuitive interfaces; and significant unfixed bugs.

In contrast, there are a number of good open-source non-image-based remote sensing tools, particularly those used for physical modelling (for example, 6S (Vermote et al., 1997), PROSPECT and SAIL (Jacquemoud, 1993)), processing of spectra (SAMS and SPECCHIO (Bojinski et al., 2003; Hueni et al., 2009)), and as libraries for programmers (GDAL, Proj4J).

Open source tools are gradually becoming more widely used within the field, but their comparative lack of use amongst some students and researchers compared to closed-source software may be attributed to their limited use in teaching. However, organisations such as the Open Source Geospatial Laboratories (OSGL: www.osgeo.org) are helping to change this, through collating teaching materials based on open-source GIS at various levels, and there has been an increase recently in the use of tools such as Quantum GIS for introductory GIS teaching.

3. Programming for remote sensing

There is a broad community of scientists who write their own specialist processing programs within the remote sensing discipline, with 80% of the PhD students surveyed having programmed as part of their research. In many disciplines researchers are arguing for increased use and teaching of software, but as there is no real choice as to whether to use software in remote sensing, it is the use and teaching of programming that has become the discussion ground.

The reason for the significantly higher prevalence of programming in remote sensing compared to many other disciplines is that much remote sensing research involves developing new methods. Some of these new methods could be implemented by simply combining functions available in existing software, but most non-trivial methods require more than this. Even if the research is using existing methods, the large volumes of data used in many studies make processing using a GUI-based tool unattractive. For example, many studies in remote sensing use time series of images to assess environmental change (for example, deforestation or increases in air pollution) and, with daily imagery now being provided by various sensors, these studies can regularly use many hundreds of images. Processing all of these images manually would be incredibly time-consuming, and thus code is usually written to automate this process. Some processing tools provide ‘macro’ functionality which allows common tasks to be automated, but this is not available in all tools (for example, ENVI) and is often very limited. I recently wrote code to automate the downloading, reprojecting, resampling and subsetting of over 7000 MODIS Aerosol Optical Thickness images, to create a dataset of daily air pollution levels in India over the last ten years: creating this dataset would have been impossible by hand!

The above description suggests two main uses of programming in remote sensing research:

- Writing code for new methods, as part of the development and testing of these methods
- Writing scripts to automate the processing of large volumes of data using existing methods
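The second of these uses, automation, can be sketched in a few lines of Python. The example below is a minimal illustration rather than the code used for the MODIS dataset described above: the `process_image` function is a hypothetical placeholder for the real per-image work (reprojecting, resampling, subsetting), and the `images` directory and `*.tif` pattern are assumptions.

```python
from pathlib import Path


def process_image(path):
    """Placeholder for the real per-image work (hypothetical).

    Here it only returns the file size in bytes so the sketch runs anywhere.
    """
    return path.stat().st_size


def process_directory(input_dir, pattern="*.tif"):
    """Apply process_image to every matching file, in a repeatable order."""
    results = {}
    for path in sorted(Path(input_dir).glob(pattern)):
        try:
            results[path.name] = process_image(path)
        except OSError as err:
            # Record the failure and carry on, so one bad file does not
            # stop a run over thousands of images.
            results[path.name] = f"failed: {err}"
    return results


if __name__ == "__main__":
    for name, outcome in process_directory("images").items():
        print(name, outcome)
```

Sorting the file list and recording failures instead of stopping are small choices, but they matter when the same script has to be re-run over hundreds or thousands of images.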

3.1 Programming languages: the curious case of IDL and the growth of Python

The RSPSoc questionnaire respondents used languages as diverse as Python, IDL, C, FORTRAN, R, Mathematica, Matlab, PHP and Visual Basic, but the most common of these were Matlab, IDL and Python. Matlab is a commercial programming language which is commonly used across many areas of science, but IDL is generally less well-known, and it is interesting to consider why this language has such a large following within remote sensing.

IDL’s popularity stems from the fact that the ENVI remote sensing software is implemented in IDL, and exposes a wide range of its functionality through an IDL Application Programming Interface (API). This led to significant usage of IDL for writing scripts to automate processing of remotely-sensed data, and the similarity of IDL to Fortran (particularly in terms of its array-focused nature) encouraged many in the field to embrace it for developing new methods too. Although first developed in 1977, IDL is still actively developed by its current owner (Exelis Visual Information Solutions) and is still used by many remote sensing researchers, and taught as part of MSc programmes at a number of UK universities, including the University of Southampton.

However, I feel that IDL’s time as one of the most-used languages in remote sensing is coming to an end. When compared to modern languages such as Python (see below), IDL is difficult to learn, time-consuming to write and, of course, very expensive to purchase (although an open-source version called GDL is available, it is not compatible with the ENVI API, and thus negates one of the main reasons for remote sensing scientists to use IDL).

Python is a modern interpreted programming language which has an easy-to-learn syntax - often referred to as ‘executable pseudocode’. There has been a huge rise in the popularity of Python in a wide range of scientific research domains over the last decade, made possible by the development of the numpy (‘numerical python’ (Walt et al., 2011)), scipy (‘scientific python’ (Jones et al., 2001)) and matplotlib (‘matlab-like plotting’ (Hunter, 2007)) libraries. These provide efficient access to and processing of arrays in Python, along with the ability to plot results using a syntax very similar to Matlab. In addition to these fundamental libraries, over two thousand other scientific libraries are available at the Python Package Index (http://pypi.python.org), a number of which are specifically focused on remote-sensing.

Remote sensing data processing in Python has been helped by the development of mature libraries of code for performing common tasks. These include the Geospatial Data Abstraction Library (GDAL) which provides functions to load and save almost any remote-sensing image format or vector-based GIS format from a wide variety of programming languages including, of course, Python. In fact, a number of the core GDAL utilities are now implemented using the GDAL Python interface, rather than directly in C++. Use of a library such as GDAL gives a huge benefit to those writing remote-sensing processing code, as it allows them to ignore the details of the individual file formats they are using (whether they are GeoTIFF files, ENVI files or the very complex Erdas IMAGINE format files) and treat all files in exactly the same way, using a very simple API which allows easy loading and saving of chunks of images to and from numpy arrays.
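As a rough illustration of reading an image in chunks through GDAL, the sketch below computes the mean of one band without loading the whole image into memory. The function names and the 512-pixel block size are my own assumptions, and for brevity the example ignores no-data values; it needs the GDAL Python bindings (and numpy) to be installed.

```python
def iter_windows(xsize, ysize, block=512):
    """Yield (xoff, yoff, width, height) windows that tile an image."""
    for yoff in range(0, ysize, block):
        height = min(block, ysize - yoff)
        for xoff in range(0, xsize, block):
            width = min(block, xsize - xoff)
            yield xoff, yoff, width, height


def band_mean(path, band_number=1, block=512):
    """Mean of one band, read chunk by chunk through GDAL.

    GDAL is imported here so that iter_windows can be used without it.
    """
    from osgeo import gdal  # requires the GDAL Python bindings

    dataset = gdal.Open(path)
    if dataset is None:
        raise IOError(f"GDAL could not open {path}")
    band = dataset.GetRasterBand(band_number)
    total, count = 0.0, 0
    for xoff, yoff, width, height in iter_windows(
            dataset.RasterXSize, dataset.RasterYSize, block):
        # Each chunk arrives as a numpy array, whatever the file format.
        chunk = band.ReadAsArray(xoff, yoff, width, height)
        total += float(chunk.sum())
        count += chunk.size
    return total / count
```

The same code should work unchanged for any raster format that GDAL can open, which is the point made above about ignoring the details of individual file formats.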

Another set of important Python libraries used by remote-sensing researchers are those originally focused on the GIS community, including libraries for manipulating and processing vector data (such as Shapely) and libraries for dealing with the complex mathematics of projections and co-ordinate systems and the conversion of map references between these (such as the Proj4 library, which has APIs for a huge range of languages). Just as ENVI exposes much of its functionality through an IDL API, so ArcGIS, Quantum GIS, GRASS and many other tools expose their functionality through a Python API. Thus, Python can be used very effectively as a ‘glue language’ to join functionality from a wide range of tools together into one coherent processing hierarchy.

A number of remote-sensing researchers have also released very specialist libraries for specific sub-domains within remote-sensing. Examples of this include the Earth Observation Land Data Assimilation System (EOLDAS) developed by Paul Lewis (Lewis et al., 2012), and the Py6S (Wilson, 2012) and PyProSAIL (Wilson, 2013) libraries which I have developed, which provide a modern programmatic interface to two well-known models within the field: the 6S atmospheric radiative transfer model and the ProSAIL vegetation spectral reflectance model.

Releasing these libraries - along with other open-source remote sensing code - has benefited my career, as it has brought me into touch with a wide range of researchers who want to use my code, helped me to develop my technical writing skills and also led to the publication of a journal paper. More importantly than any of these though, developing the Py6S library has given me exactly the tool that I need to do my research. I don’t have to work around the features (and bugs!) of another tool - I can implement the functions I need, focused on the way I want to use them, and then use the tool to help me do science. Of course, Py6S and PyProSAIL themselves rely heavily on a number of other Python scientific libraries - some generic, some remote-sensing focused - which have been released by other researchers.

The Py6S and PyProSAIL packages demonstrate another attractive use for Python: wrapping legacy code in a modern interface. This may not seem important to some researchers - but many people struggle to use and understand models written in old languages which cannot cope with many modern data input and output formats. Python has been used very successfully in a number of situations to wrap these legacy codes and provide a ‘new lease of life’ for tried-and-tested codes from the 1980s and 1990s.
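The general shape of such a wrapper can be sketched as follows. This is not a description of how Py6S or PyProSAIL work internally: it assumes a hypothetical command-line model that reads ‘name value’ lines on standard input and writes ‘name value’ lines on standard output, and it uses a tiny Python one-liner as a stand-in for the legacy executable so that the example runs anywhere.

```python
import subprocess
import sys


def run_legacy_model(command, parameters):
    """Run a command-line model and return its outputs as a dict.

    Assumes (hypothetically) that the model reads 'name value' lines on
    standard input and writes 'name value' lines on standard output.
    """
    input_text = "".join(
        f"{name} {value}\n" for name, value in parameters.items()
    )
    completed = subprocess.run(
        command, input=input_text, capture_output=True, text=True, check=True
    )
    outputs = {}
    for line in completed.stdout.splitlines():
        if not line.strip():
            continue
        name, value = line.split()
        outputs[name] = float(value)
    return outputs


# Stand-in for a legacy executable: echoes each input value doubled.
# A real wrapper would point at the compiled model binary instead.
FAKE_MODEL = [
    sys.executable, "-c",
    "import sys\n"
    "for line in sys.stdin:\n"
    "    name, value = line.split()\n"
    "    print(name, float(value) * 2)\n",
]

if __name__ == "__main__":
    print(run_legacy_model(FAKE_MODEL, {"wavelength": 0.55, "aot": 0.2}))
```

The user of the wrapper only ever sees a Python function taking and returning dictionaries; the awkward input and output formats of the old code stay hidden in one place.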

3.2 Teaching programming in remote sensing

I came into the field of remote sensing having already worked as a programmer, so it was natural for me to use programming to solve many of the problems within the field. However, many students do not have this prior experience, so rely on courses within their undergraduate or MSc programmes to introduce them both to the benefits of programming within the field, and the technical knowledge they need to actually do it. In my experience teaching on these courses, the motivation is just as difficult as the technical teaching - students need to be shown why it is worth their while to learn a complex and scary new skill. Furthermore, students need to be taught how to program in an effective, reproducible manner, rather than just the technical details of syntax.

I believe that my ability to program has significantly benefited me as a remote-sensing researcher, and I am convinced that more students should be taught this essential skill. It is my view that programming must be taught far more broadly at university: just as students in disciplines from Anthropology to Zoology get taught statistics and mathematics in their undergraduate and masters-level courses, they should be taught programming too. This is particularly important in a subject such as remote sensing where programming can provide researchers with a huge boost in their effectiveness and efficiency. Unfortunately, where teaching is done, it is often similar to department-led statistics and mathematics courses: that is, not very good. Outsourcing these courses to the Computer Science department is not a good solution either - remote sensing students do not need a CS1-style computer science course, they need a course specifically focused on programming within remote sensing.

In terms of remote sensing teaching, I think it is essential that a programming course (preferably taught using a modern language like Python) is compulsory at MSc level, and available at an undergraduate level. Programming training and support should also be available to researchers at PhD, Post-Doc and Staff levels, ideally through some sort of drop-in ‘geocomputational expert’ service.

4. Reproducible research in remote sensing

Researchers working in ‘wet labs’ are taught to keep track of exactly what they have done at each step of their research, usually in the form of a lab notebook, thus allowing the research to be reproduced by others in the future. Unfortunately, this seems to be significantly less common when dealing with computational research - which includes most research in remote sensing. This has raised significant questions about the reproducibility of research carried out using ‘computational laboratories’, which leads to serious questions about the robustness of the science carried out by researchers in the field - as reproducibility is a key distinguishing factor of scientific research from quackery (Chalmers, 1999).

Reproducibility is where the automating of processing through programming really shows its importance: it is very hard to document exactly what you did using a GUI tool, but an automated script for doing the same processing can be self-documenting. Similarly, a page of equations describing a new method can leave a lot of important questions unanswered (what happens at the boundaries of the image? how exactly are the statistics calculated?) which will be answered by the code which implements the method.
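As a small illustration of how code answers the boundary question, here is a sketch of a 3x3 mean filter written in plain Python. The choice to repeat the nearest edge pixel at the borders is an assumption made for this example; another author might pad with zeros or skip the border entirely, and a page of equations would rarely say which.

```python
def mean_filter_3x3(image):
    """3x3 mean filter over a list-of-rows image.

    At the image boundary the nearest edge pixel is repeated, so every
    output pixel is the mean of exactly nine values.
    """
    rows, cols = len(image), len(image[0])
    output = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    # Clamp the neighbour index to stay inside the image.
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    total += image[rr][cc]
            output[r][c] = total / 9.0
    return output
```

Anyone reading the function can see exactly how edge pixels are treated, without having to guess or contact the author.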

A number of papers in remote sensing have shown issues with reproducibility. For example, Saleska et al. (2007) published a Science paper stating that the Amazon forest increased in photosynthetic activity during a widespread drought in 2005, and thus suggested that concerns about the response of the Amazon to climate change were overstated. However, after struggling to reproduce this result for a number of years, Samanta et al. (2010) eventually published a paper showing that the exact opposite was true, and that the spurious results were caused by incorrect cloud and aerosol screening in the satellite data used in the original study. If the original paper had provided fully reproducible details on their processing chain - or even better, code to run the entire process - then this error would likely have been caught much earlier, hopefully during the review process.

I have personally encountered problems reproducing other research published in the remote sensing literature - including the SYNTAM method for retrieving Aerosol Optical Depth from MODIS images (Tang et al., 2005), due to the limited details given in the paper, and the lack of available code, which has led to significant wasted time. I should point out, however, that some papers in the field deal with reproducibility very well. Irish (2000) provides all of the details required to fully implement the revised version of the Landsat Automatic Cloud-cover Assessment Algorithm (ACCA), mainly through a series of detailed flowcharts in the paper. The release of the code would have saved me re-implementing it, but at least all of the details were given.

5. Issues and possible solutions

The description of the use of software in remote sensing above has raised a number of issues, which are summarised here:

1. There is a lack of open-source remote-sensing software comparable to packages such as ENVI or Erdas Imagine. Although there are some tools which fulfil part of this need, they need an acceleration in development in a similar manner to Quantum GIS to bring them to a level where researchers will truly engage. There is also a serious need for an open-source tool for Object-based Image Analysis, as the most-used commercial tool for this (eCognition) is so expensive that it is completely unaffordable for many institutions.
2. There is a lack of high-quality education in programming skills for remote-sensing students at undergraduate, masters and PhD levels.
3. Many remote sensing problems are conceptually easy to parallelise (if the operation on each pixel is independent then the entire process can be parallelised very easily), but there are few tools available to allow serial code to be easily parallelised by researchers who are not experienced in high performance computing.
4. Much of the research in the remote sensing literature is not reproducible. This is a particular problem when the research is developing new methods or algorithms which others will need to build upon. The development of reproducible research practices in other disciplines has not been mirrored in remote sensing, as yet.

Problems 2 & 4 can be solved by improving the education and training of researchers - particularly students - and problems 1 & 3 can be solved by the development of new open-source tools, preferably with the involvement of active remote sensing researchers. All of these solutions, however, rely on programming being taken seriously as a scientific activity within the field, as stated by the Science Code Manifesto (http://sciencecodemanifesto.org).
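To show why independent per-pixel operations are conceptually easy to parallelise, the sketch below splits an image into blocks of rows, processes each block concurrently and stitches the results back together. It uses only Python's standard library; the function names, the scaling operation and the block count are illustrative assumptions, and threads are used so that the example runs anywhere.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_rows(rows):
    """Example per-pixel operation: convert raw 0-255 values to a 0-1 range."""
    return [[value / 255.0 for value in row] for row in rows]


def split_into_blocks(image, n_blocks):
    """Split a list-of-rows image into up to n_blocks contiguous row blocks."""
    size = max(1, -(-len(image) // n_blocks))  # ceiling division
    return [image[i:i + size] for i in range(0, len(image), size)]


def process_in_parallel(image, func, n_blocks=4,
                        executor_cls=ThreadPoolExecutor):
    """Apply func to each row block concurrently and stitch the result."""
    blocks = split_into_blocks(image, n_blocks)
    with executor_cls(max_workers=n_blocks) as executor:
        # executor.map preserves block order, so rows come back in place.
        processed = list(executor.map(func, blocks))
    return [row for block in processed for row in block]


if __name__ == "__main__":
    image = [[0, 255], [51, 102], [255, 0]]
    print(process_in_parallel(image, scale_rows, n_blocks=2))
```

For CPU-bound work in pure Python, passing `concurrent.futures.ProcessPoolExecutor` as `executor_cls` (with the per-block function defined at module level) is the usual route to a real speed-up. The details of doing this well on large images are exactly where better tools would help.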

6. Advice

The first piece of advice I would give any budding remote sensing researcher is: learn to program! It isn’t that hard - honestly! - and the ability to express your methods and processes in code will significantly boost your productivity, and therefore your career.

Once you’ve learnt to program, I would have a few more pieces of advice for you:

- Script and code things as much as possible, rather than using GUI tools. Graphical User Interfaces are wonderful for exploring data - but by the time you click all of the buttons to do some manual processing for the 20th time (after you’ve got it wrong, lost the data, or just need to run it on another image) you’ll wish you’d coded it.
- Don’t re-invent the wheel. If you want to do an unsupervised classification then use a standard algorithm (such as ISODATA or K-means) implemented through a robust and well-tested library (like the ENVI API, or scikit-learn) - there is no point wasting your time implementing an algorithm that other people have already written for you!
- Try and get into the habit of documenting your code well - even if you think you’ll be the only person who looks at it, you’ll be surprised what you can forget in six months!
- Don’t be afraid to release your code - people won’t laugh at your coding style, they’ll just be thankful that you released anything at all! Try and get into the habit of making research that you publish fully reproducible, and then sharing the code (and the data if you can) that you used to produce the outputs in the paper.

References

Bojinski, S., Schaepman, M., Schläpfer, D., Itten, K., 2003. SPECCHIO: a spectrum database for remote sensing applications. Comput. Geosci. 29, 27–38.

Chalmers, A.F., 1999. What is this thing called science? Univ. of Queensland Press.

Gastellu-Etchegorry, J.P., Demarez, V., Pinel, V., Zagolski, F., 1996. Modeling radiative transfer in heterogeneous 3-D vegetation canopies. Remote Sens. Environ. 58, 131–156. doi:10.1016/0034-4257(95)00253-7

Hueni, A., Nieke, J., Schopfer, J., Kneubühler, M., Itten, K.I., 2009. The spectral database SPECCHIO for improved long-term usability and data sharing. Comput. Geosci. 35, 557–565.

Hunter, J.D., 2007. Matplotlib: A 2D Graphics Environment. Comput. Sci. Eng. 9, 90–95. doi:10.1109/MCSE.2007.55

IPCC, 2007. Climate change 2007: The physical science basis.

Irish, R.R., 2000. Landsat 7 automatic cloud cover assessment, in: AeroSense 2000. International Society for Optics and Photonics, pp. 348–355.

Jacquemoud, S., 1993. Inversion of the PROSPECT+ SAIL canopy reflectance model from AVIRIS equivalent spectra: theoretical study. Remote Sens. Environ. 44, 281–292.

Jones, E., Oliphant, T., Peterson, P., others, 2001. SciPy: Open source scientific tools for Python.

Kerr, J.T., Ostrovsky, M., 2003. From space to species: ecological applications for remote sensing. Trends Ecol. Evol. 18, 299–305. doi:10.1016/S0169-5347(03)00071-5

Lewis, P., Gómez-Dans, J., Kaminski, T., Settle, J., Quaife, T., Gobron, N., Styles, J., Berger, M., 2012. An Earth Observation Land Data Assimilation System (EO-LDAS). Remote Sens. Environ. 120, 219–235. doi:10.1016/j.rse.2011.12.027

Marx, A.J., Loboda, T.V., 2013. Landsat-based early warning system to detect the destruction of villages in Darfur, Sudan. Remote Sens. Environ. 136, 126–134. doi:10.1016/j.rse.2013.05.006

Ramsey, P., 2009. Geospatial: An Open Source Microcosm. Open Source Bus. Resour.

Saleska, S.R., Didan, K., Huete, A.R., Da Rocha, H.R., 2007. Amazon forests green-up during 2005 drought. Science 318, 612–612.

Samanta, A., Ganguly, S., Hashimoto, H., Devadiga, S., Vermote, E., Knyazikhin, Y., Nemani, R.R., Myneni, R.B., 2010. Amazon forests did not green-up during the 2005 drought. Geophys. Res. Lett. 37, L05401.

Tang, J., Xue, Y., Yu, T., Guan, Y., 2005. Aerosol optical thickness determination by exploiting the synergy of TERRA and AQUA MODIS. Remote Sens. Environ. 94, 327–334. doi:10.1016/j.rse.2004.09.013

Vermote, E.F., Tanre, D., Davis, J.L., Herman, M., Morcette, J.J., 1997. Second Simulation of the Satellite Signal in the Solar Spectrum, 6S: an overview. IEEE Trans. Geosci. Remote Sens. 35, 675–686. doi:10.1109/36.581987

Walt, S. van der, Colbert, S.C., Varoquaux, G., 2011. The NumPy Array: A Structure for Efficient Numerical Computation. Comput. Sci. Eng. 13, 22–30. doi:10.1109/MCSE.2011.37

Wilson, R.T., 2013. PyProSAIL.

Wilson, R.T., 2012. Py6S: A Python interface to the 6S Radiative Transfer Model. Comput. Geosci. 51, 166–171.