Workshop sets new Benchmarks

Posted on 11 May 2016

By Sarah Mount, Research Associate at King’s College London, and Institute Fellow

In many scientific disciplines, experimental methods are well established. Whether the methods in your field are simulations, lab work, ethnographic studies or some other form of testing, commonly accepted criteria for accepting a hypothesis are passed down through the academic generations and form part of the culture of every discipline. Why, then, did the Software Sustainability Institute and the Software Development Team feel the need to run #bench16, a one-day workshop on software benchmarking, at King’s College London earlier this month?

Broadly accepted methods for establishing the running time of a piece of software (its latency) are not widespread in computer science, which often comes as a surprise to those working in other fields.

Unlike clinicians and ethnographers, computer scientists are in the enviable position of being in full control of their computers, but there are still a large number of confounding variables which affect latency testing. These can come from the operating system, the programming language, the machine itself, or even from cosmic rays. The statistical properties of these sources of variation are not well understood, and only some confounding variables can be controlled. Once as much variation as possible has been controlled for, the resulting experimental configuration is likely to be impossible to reproduce on another operating system. Moreover, the literature contains a wide variety of ways to report the results of latency tests, from averaging a small number of executions of the software to more sophisticated hypothesis testing. Even questions such as “how many times should I execute my software to get a stable average latency?” have no widely accepted answer. Computer scientists and research software engineers generally agree that this situation needs to be improved, but there is not yet a broad consensus on which techniques should be widely adopted.
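To make the reporting problem concrete, here is a minimal Python sketch of one common approach: run the software many times and report the mean with an approximate confidence interval rather than a single number. Everything in it is an assumption made for illustration, not a method recommended at the workshop: the `workload` function is a hypothetical stand-in for the software under test, the choice of 30 repetitions is arbitrary, and the interval relies on a normal approximation that real latency data may not satisfy.

```python
import math
import statistics
import time


def measure_latency(func, repetitions=30):
    """Run func repeatedly and return each wall-clock running time in seconds."""
    timings = []
    for _ in range(repetitions):
        start = time.perf_counter()
        func()
        timings.append(time.perf_counter() - start)
    return timings


def summarise(timings, z=1.96):
    """Return (mean, low, high): the mean and an approximate 95% interval.

    Assumes a normal approximation, which real latency data may violate.
    """
    mean = statistics.mean(timings)
    half_width = z * statistics.stdev(timings) / math.sqrt(len(timings))
    return mean, mean - half_width, mean + half_width


def workload():
    # Hypothetical stand-in for the software under test.
    sum(i * i for i in range(10_000))


timings = measure_latency(workload)
mean, low, high = summarise(timings)
print(f"mean {mean:.6f}s, approx. 95% CI [{low:.6f}s, {high:.6f}s] "
      f"over {len(timings)} runs")
```

Even this small sketch leaves the open questions untouched: it does not say whether 30 runs are enough, and it does nothing to control the operating system, the language runtime or the hardware.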

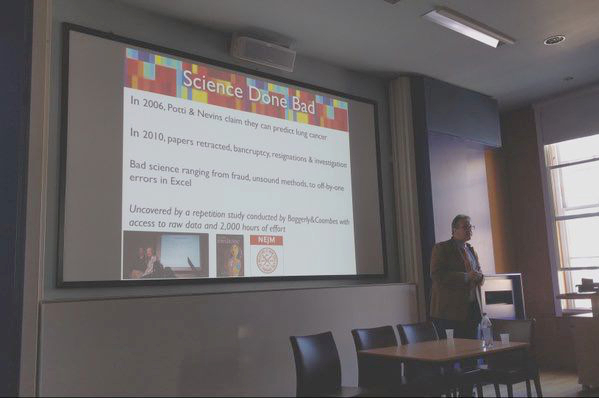

Jan Vitek opened the #bench16 workshop with an overview of the search for repeatability, reproducibility and rigor in his own research on concurrent garbage collection. He asked a provocative question: why has there been no scandal in computer science over the rigor of our results?

Edd Barrett and Institute Fellows Joe Parker and James Harold Davenport spoke about the difficulties and advantages of benchmarking in their own areas: software development, bioinformatics and high-performance computing, respectively. Edd presented some early results from benchmarks of JIT-compiled language interpreters, which suggest that the accepted understanding of how these programs behave is somewhat naive. Joe asked us why benchmarking is rarely undertaken in bioinformatics, when latency is vital to the workflow of bioinformaticians. James explained how benchmarking HPC hardware enabled the University of Bath to procure a system which was well suited to its users and held the hardware vendors firmly to account.

Tomáš Kalibera, Simon Taylor and Jeremy Bennett all spoke about statistical and experimental methods. Tomáš noted that many benchmarking results are reported in the form of a claim of improvement on a previous result, e.g. “this new method shows a 1.5x speed-up compared to …”. There are many “averages” that can be used here, and several common ways to calculate speed-up; of course, some will give much more attractive results than others. Simon gave the workshop an introduction to time series analysis. Benchmarks are currently rarely presented as time series, even though some simple techniques from this area could be widely applicable to latency testing. Jeremy presented some results which used factorial and Plackett-Burman experimental design, two methods which are widely used in engineering and rarely seen in computer science.
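The point about averages is easy to see with a small Python sketch. The timings below are invented purely for illustration (they are not results from any talk), and the three summaries shown are simply common choices, not a list taken from Tomáš's presentation.

```python
import statistics

# Hypothetical running times in seconds for three benchmarks (illustrative only).
baseline = [10.0, 4.0, 1.0]
improved = [5.0, 4.0, 2.0]

# Per-benchmark speed-up: old time divided by new time.
ratios = [old / new for old, new in zip(baseline, improved)]

# Three common ways to collapse the same data into one "speed-up" figure.
arithmetic_mean_of_ratios = statistics.mean(ratios)
geometric_mean_of_ratios = statistics.geometric_mean(ratios)
ratio_of_total_times = sum(baseline) / sum(improved)

print(f"per-benchmark speed-ups:   {ratios}")
print(f"arithmetic mean of ratios: {arithmetic_mean_of_ratios:.2f}x")
print(f"geometric mean of ratios:  {geometric_mean_of_ratios:.2f}x")
print(f"ratio of total times:      {ratio_of_total_times:.2f}x")
```

With these made-up numbers the arithmetic mean of the ratios is about 1.17x, the geometric mean is 1.00x (no improvement at all), and the ratio of total times is about 1.36x. The same measurements support three different headline claims, so a reported speed-up means little unless the summary method is stated alongside it.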

A good number of new skills and experimental methods for benchmarking were shared at #bench16, and this was one motivation for inviting participants from a broad range of scientific fields. Does the future of benchmarking in computer science hold any hope? Many of the talks referred to the difficulty of funding and making time for the time-consuming work of building experimental infrastructure for rigorous latency testing. However, Jan Vitek gave us reason to be hopeful in his keynote talk: a number of computer science conferences are now accepting artefacts. These are practical submissions that sit alongside papers, are assessed by a separate review panel, and provide the reader with a repeatable experiment which matches the findings of the published science.

The #bench16 workshop took place at King’s College London on April 20th 2016, and was chaired by Sarah Mount and Laurence Tratt. The workshop was sponsored by the Software Sustainability Institute and EPSRC, via the Lecture Fellowship. You can find slides for all the #bench16 talks on the event website.

Image of Jan Vitek keynote at #bench16. © Laurence Tratt, King’s College London CC-BY-NC