Super-computing, graphics cards, brains and bees

Posted on 2 September 2014

By Thomas Nowotny, Professor of Informatics at the University of Sussex.

This article is part of our series: a day in the software life, in which we ask researchers from all disciplines to discuss the tools that make their research possible.

Computer simulations have transformed almost every aspect of science and technology. From Formula One cars to modern jet aircraft, from forecasting the weather to predicting the stock market, and even the inner workings of the brain itself, most research and development today depends heavily on numerical simulation.

This is thanks to rapid advances over the past decades, which have seen computer speeds roughly double every two years. For much of this time, computer speed was increased both by shrinking components and by raising the clock frequency at which central processing units (CPUs), the workhorses of every computer, operate. Yet we are now near the limits set by quantum physics, which prevent further progress in this direction. This has led to a shift towards parallel architectures, in which individual processors still run at roughly the same speed but there are more of them sharing the work.

In an unusual turn of events, this has put graphics processing units (GPUs), the co-processors designed to accelerate the display of 3D graphics, ahead of CPUs in processing speed. The colour of each pixel on a screen can be calculated largely independently of the other pixels, so GPUs were using massively parallel architectures long before the “quantum speed limit” hit CPUs. As a result, modern graphics cards have several thousand processing cores, compared with at most a few dozen on a CPU.
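As a rough illustration of why pixel work parallelises so well, here is a small Python sketch. The `shade` function is a made-up stand-in for a real GPU shader, and the image size and worker count are arbitrary assumptions. Because each pixel's colour depends only on its own coordinates, the pixels can be divided among any number of workers (a few threads here, thousands of GPU cores in practice) without changing the result.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 64, 48  # arbitrary toy image size


def shade(x: int, y: int) -> tuple[int, int, int]:
    """Hypothetical toy shader: the colour depends only on this pixel's coordinates."""
    r = (x * 255) // (WIDTH - 1)
    g = (y * 255) // (HEIGHT - 1)
    b = (x ^ y) & 0xFF
    return (r, g, b)


def render_serial() -> list[tuple[int, int, int]]:
    """Compute every pixel one after another, row by row."""
    return [shade(x, y) for y in range(HEIGHT) for x in range(WIDTH)]


def render_parallel(workers: int = 8) -> list[tuple[int, int, int]]:
    """Hand pixels to a pool of workers; no pixel reads another pixel's result,
    so any division of the work is valid. pool.map keeps the original order."""
    pixels = [(x, y) for y in range(HEIGHT) for x in range(WIDTH)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda p: shade(*p), pixels))


if __name__ == "__main__":
    print("identical images:", render_serial() == render_parallel())
```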

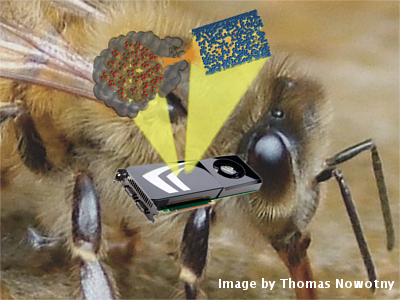

In the Green Brain project, funded by the Engineering and Physical Sciences Research Council (EPSRC), we are learning how to harness the superior computational power of GPUs to build a model of a honeybee’s brain that can run in real time and can therefore be used in a flying robot. The project is a collaboration with researchers at the University of Sheffield, who are focusing on the bee’s visual system and on robotics. At Sussex we are working on the olfactory system (the sense of smell) and on how to use GPU hardware efficiently to simulate brain activity.

Performing brain simulations on massively parallel GPU hardware poses unique challenges. The brain’s basic building blocks are neurons, which connect to one another through millions of contact points called synapses. All these neurons and synapses operate in parallel, so simulating them on parallel computers makes a great deal of sense. However, no brain structure exactly matches a GPU’s architecture, or vice versa. This makes it very difficult to decide how to distribute the work of simulating brain activity across a GPU, because too much time can be wasted sending data around and between its cores. Worse, we risk having to start from scratch whenever the model or the GPU itself changes.
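To make the two kinds of work concrete, here is a minimal Python sketch (not GeNN code) of a toy network of leaky integrate-and-fire neurons, a model chosen here purely for illustration. Each neuron's update needs only its own state, so it could be given its own GPU thread; delivering spikes through synapses, by contrast, needs data belonging to other neurons, which is where the costly data movement arises. All function names, parameter values and the simplified input units are hypothetical.

```python
# Toy leaky integrate-and-fire (LIF) network: an illustrative sketch, not GeNN code.
# Parameter values and names are hypothetical; units are simplified.


def lif_step(v, i_in, dt=0.1, tau=20.0, v_rest=-65.0, v_thresh=-50.0, v_reset=-65.0):
    """Advance one neuron by one Euler step; uses only that neuron's own data."""
    v = v + dt / tau * (v_rest - v) + dt * i_in
    if v >= v_thresh:
        return v_reset, True  # spike, then reset the membrane potential
    return v, False


def propagate(spiked, synapses, n_neurons):
    """Sum synaptic input for each neuron from the presynaptic neurons that spiked.

    synapses[pre] is a list of (post, weight) pairs. Unlike lif_step, this step
    reads other neurons' data, which on a GPU means traffic between cores and memory.
    """
    i_syn = [0.0] * n_neurons
    for pre, did_spike in enumerate(spiked):
        if did_spike:
            for post, weight in synapses[pre]:
                i_syn[post] += weight
    return i_syn


def simulate(n_steps, external, synapses):
    """Run the network and return the number of spikes fired by each neuron."""
    n = len(external)
    v = [-65.0] * n
    i_syn = [0.0] * n
    spike_counts = [0] * n
    for _ in range(n_steps):
        # Phase 1: independent per-neuron updates (e.g. one GPU thread per neuron).
        results = [lif_step(v[k], external[k] + i_syn[k]) for k in range(n)]
        v = [state for state, _ in results]
        spiked = [fired for _, fired in results]
        for k, fired in enumerate(spiked):
            spike_counts[k] += int(fired)
        # Phase 2: gather synaptic input across neurons for the next step.
        i_syn = propagate(spiked, synapses, n)
    return spike_counts


if __name__ == "__main__":
    # Neuron 0 is driven externally and excites neuron 1; neuron 2 is isolated.
    print(simulate(1000, [2.0, 0.0, 0.0], [[(1, 200.0)], [], []]))
```

Only the second phase couples neurons together, so how synapses are laid out in memory largely determines how much time goes on moving data rather than computing.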

As scientists, we prefer to solve a problem once and for all rather than deal with it time and again. We have therefore designed a meta-compiler, GPU enhanced Neuronal Networks (GeNN), that does the difficult housekeeping and optimisation work for us. It allows researchers to specify a network model in simple terms: populations of neurons and synapses, together with their dynamical equations. GeNN feeds this description, along with information it gathers automatically about the hardware being used, into a number of heuristics that organise the code for simulating the specified network efficiently.
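GeNN's actual input format and heuristics are not described in this post, so the following is only a toy Python sketch of the meta-compiler idea under our own assumptions. A neuron population is described by a hypothetical dictionary of parameters and equation snippets (written as C expressions), and a single piece of hardware information, the maximum number of threads per block, drives a simple block-size heuristic. The output is CUDA source text in which one thread updates one neuron.

```python
from string import Template

# Hypothetical model description: NOT GeNN's actual input format.
LIF_MODEL = {
    "name": "LIF",
    "params": {"DT": 0.1, "TAU": 20.0, "V_REST": -65.0, "V_THRESH": -50.0, "V_RESET": -65.0},
    "sim_code": "v += DT / TAU * (V_REST - v) + DT * I;",
    "threshold": "v >= V_THRESH",
    "reset_code": "v = V_RESET;",
}

# CUDA kernel skeleton: each thread updates the state of one neuron.
KERNEL_TEMPLATE = Template("""\
__global__ void update_${name}(float *V, const float *Isyn, bool *spiked, int n)
{
    int id = blockIdx.x * blockDim.x + threadIdx.x;
    if (id >= n) return;
    float v = V[id];
    float I = Isyn[id];
    ${sim_code}
    spiked[id] = (${threshold});
    if (spiked[id]) { ${reset_code} }
    V[id] = v;
}
""")


def choose_block_size(n_neurons, max_threads_per_block, warp_size=32):
    """Toy heuristic: round the population up to a whole number of warps,
    capped at the device's limit on threads per block."""
    warps_needed = -(-n_neurons // warp_size)  # ceiling division
    return min(warps_needed * warp_size, max_threads_per_block)


def generate_cuda(model, n_neurons, device):
    """Emit CUDA source for one neuron population on a (hypothetical) device."""
    defines = "\n".join(f"#define {key} ({value!r}f)" for key, value in model["params"].items())
    kernel = KERNEL_TEMPLATE.substitute(
        name=model["name"],
        sim_code=model["sim_code"],
        threshold=model["threshold"],
        reset_code=model["reset_code"],
    )
    block = choose_block_size(n_neurons, device["max_threads_per_block"])
    grid = -(-n_neurons // block)  # enough blocks to cover every neuron
    launch = (f"// launch: update_{model['name']}<<<{grid}, {block}>>>"
              f"(d_V, d_Isyn, d_spiked, {n_neurons});")
    return f"{defines}\n\n{kernel}\n{launch}\n"


if __name__ == "__main__":
    print(generate_cuda(LIF_MODEL, n_neurons=5000, device={"max_threads_per_block": 1024}))
```

Changing the model dictionary or the device description simply regenerates the code, rather than requiring a hand-rewritten kernel, which is the point of the meta-compiler approach.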

The result of this process is optimised code for CUDA (Compute Unified Device Architecture), the programming interface NVIDIA provides for running applications on its powerful GPUs. Depending on the details of the brain model and the hardware you own, a simulation generated by GeNN can run between 10 and 500 times faster than it would on a single core of a typical contemporary CPU. A 500-fold speed-up can make the difference between waiting 10 hours for the results of a simulation and having them in the time it takes to drink a quick coffee.
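The arithmetic behind the coffee comparison is simple: with a speed-up factor $S$, a run that takes 10 hours on a single CPU core shrinks as follows at the two ends of the quoted range.

$$
t_{\text{GPU}} = \frac{t_{\text{CPU}}}{S}, \qquad
\frac{10\ \text{h}}{500} = \frac{600\ \text{min}}{500} = 1.2\ \text{min} = 72\ \text{s}, \qquad
\frac{10\ \text{h}}{10} = 1\ \text{h}.
$$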

The GeNN software is publicly available under the GNU GPL. We have just released the first beta version, and we hope that many computational neuroscientists worldwide will find it useful. Beyond its direct remit of simulating neuronal networks, we believe our meta-compiler idea could also be useful for other types of numerical simulation on GPUs.

In the future we plan to look at different types of brain structures, as well as at simulations unrelated to the brain, such as flood models. We have secured funding for future work on GeNN applications from the European Union, as part of the Human Brain Project, and from the Royal Academy of Engineering and the Leverhulme Trust.