Seventeen authors, four weeks and one reproducible paper (we hope)

Posted on 21 October 2014

By Steve Crouch, Research Software Group Leader, Ian Gent, Olexandr Konovalov and Lars Kotthoff.

Reproducibility is a cornerstone of science. The first Summer School in Experimental Methodology in Computational Research, held in August at the University of St Andrews, explored the state of the art in methods and tools for enabling reproducible and "recomputable" research.

This included a couple of sessions presented by the Institute on the importance of reproducibility and sustainable software development. However, this School took the notion of reproducibility to a novel extreme. Not only can the technical exploratory case studies be reproduced by others, but a paper jointly written by the organisers and participants during the week that includes these results can be reproduced as well.

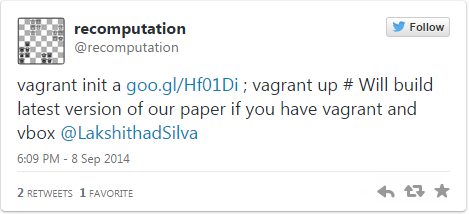

“When we celebrate the publication of a paper, we might link to the submitted version,” says Ian Gent, the lead organiser of the workshop and author of the Recomputation Manifesto. “Yet here, we can give you instructions for getting the latest version of the paper - which might be very different to the submitted version. Not only that, but you can build it for yourself if you have the tools Vagrant and VirtualBox installed, even though building the paper has dependencies on particular versions of R, related R packages and the LaTeX environment.”

In keeping with the theme of the School, the paper itself is open and reproducible: the process of writing it was captured in a GitHub repository that hosted the source of the paper as well as supplementary code and data.

By following the instructions - which fit in a tweet! - a virtual machine that rebuilds the paper is automatically downloaded and run in a matter of minutes, leaving the user with a PDF of the paper. The build runs R to re-analyse experimental data from the case studies and produce visualisations, then compiles the current paper source, which includes those visualisations, into a PDF via LaTeX.
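To make the shape of that build concrete, here is a minimal sketch in Python of an "analyse, then typeset" pipeline of the kind described above. It is an illustration only, not the script used in the paper's repository: the file names `analysis.R` and `paper.tex` are hypothetical, and the sketch assumes `Rscript` and `pdflatex` are on the path inside the virtual machine. By default it only lists the steps rather than running them.

```python
import subprocess
from pathlib import Path

# Hypothetical file names: the real repository layout is not described in this post.
ANALYSIS_SCRIPT = "analysis.R"
PAPER_SOURCE = "paper.tex"


def build_steps(analysis=ANALYSIS_SCRIPT, paper=PAPER_SOURCE):
    """Return the ordered commands for an analyse-then-typeset build."""
    return [
        # Re-analyse the experimental data and write out the figures.
        ["Rscript", analysis],
        # First LaTeX pass picks up the freshly generated figures.
        ["pdflatex", "-interaction=nonstopmode", paper],
        # Second pass resolves cross-references.
        ["pdflatex", "-interaction=nonstopmode", paper],
    ]


def run_build(workdir=".", dry_run=True):
    """Run each step in order, stopping at the first failure.

    With dry_run=True the commands are returned but not executed.
    """
    steps = build_steps()
    if not dry_run:
        for cmd in steps:
            # check=True raises CalledProcessError if a step fails.
            subprocess.run(cmd, cwd=Path(workdir), check=True)
    return steps


if __name__ == "__main__":
    for cmd in run_build(dry_run=True):
        print(" ".join(cmd))
```

Running the analysis before the typesetting step is what keeps the figures in the PDF in sync with the data: if the data or the R code changes, the next build picks it up without any manual copying.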

The paper reports on several case studies performed during the School that describe what was achieved during the event, including a comparison of University ethical requirements for replicating experiments involving human participants, an investigation into whether a virtualised environment can affect the quality and reproducibility of parallel and distributed experiments, and an experience-based report on the exploration of issues with reproducing non-Computer Science experiments. Again, in keeping with the goal of reproducibility, virtual machines are available to allow results from the technical case studies to be reproduced. After the Summer School, the paper was extended and redrafted over the next three weeks and submitted to a special issue of IEEE Transactions on Emerging Topics in Computing on Reproducible Research Methodologies.

The virtual machines and the submitted version of the paper are publicly available, and the entire history of the paper is also available openly on GitHub. Surely a model to consider for other events that also aim to explore and document issues in reproducibility!